I've started to write this blog post several times over the past month, but stopped each time because I didn't have the right approach. The challenge is that, as with a lot of questions, the answer is "it depends."

I'm going to try to give as specific an "it depends" answer as possible to the question: "What is the best way to configure or use vNUMA to make sure I get the best performance?"

You already know that the answer is "it depends," so I will lay out some of the reasons why.

A NUMA architecture places memory with each CPU on a system to create NUMA nodes. Each of today's CPUs has multiple cores, which results in a NUMA node with a given number of cores and a given amount of RAM. For example, a four-socket Nehalem-EX system with 8 cores per socket has 32 cores in total. If this system had 256GB of RAM in total, evenly populated, each socket would have 64GB of RAM.
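The arithmetic above can be sketched in a few lines of Python. This is only an illustration: it assumes one NUMA node per socket and RAM split evenly across sockets, and the function name `numa_layout` is made up for this example rather than taken from any VMware tool.

```python
def numa_layout(sockets, cores_per_socket, total_ram_gb):
    """Return (total_cores, cores_per_node, ram_per_node_gb).

    Assumptions (not from any VMware API): one NUMA node per socket,
    and memory evenly distributed across the sockets.
    """
    total_cores = sockets * cores_per_socket
    ram_per_node_gb = total_ram_gb / sockets
    return total_cores, cores_per_socket, ram_per_node_gb


# The four-socket, 8-core-per-socket, 256GB example from the text.
total_cores, cores_per_node, ram_per_node_gb = numa_layout(4, 8, 256)
print(total_cores, cores_per_node, ram_per_node_gb)  # 32 8 64.0
```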

The way to get the best overall performance from the system would be to have multiple VMs that each fit neatly within a NUMA node on the host. In this case, the ESX scheduler will automatically place each VM and its associated memory on a NUMA node. When the workload fits within a VM that is equal to or smaller than a NUMA node, the only thing that has to be NUMA aware is ESX. This diagram shows four VMs, each with 8 vCPUs, on a Nehalem-EX system, each fitting nicely into its own NUMA node.

What if your VM needs to be bigger than a NUMA node? One of the great new features in vSphere 5 is vNUMA, the ability for NUMA to be presented inside the VM to the guest OS. With the new "Monster VMs" of up to 32 vCPUs, this capability really comes in handy. It allows the guest to be aware of the NUMA topology on the host and make intelligent decisions about memory usage and process scheduling.

Without vNUMA, the OS and apps are not aware of the NUMA architecture and will just treat the vCPUs and vRAM as one big pool and assign memory and processes. This will result in something like this:

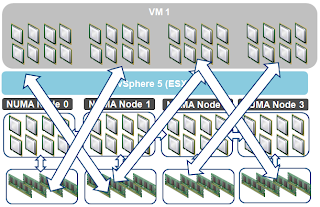

But with vNUMA, the underlying NUMA architecture can be presented inside the VM. This allows the OS and application to make better decisions. If the application is NUMA aware and does a good job of optimizing things, it can end up looking something like this:

The reason I can only say it will look something like this is that it depends heavily on the application's NUMA support and implementation. vNUMA only enables NUMA inside the guest. Some applications can be written to easily take advantage of NUMA and keep everything nice and neat on separate nodes. Some applications simply don't allow for this due to the way they need to function, so there could still be lots of non-local memory accesses for processes. This is true for both physical and virtual environments.

To summarize: if your VM will fit in a single NUMA node, then you don't need vNUMA. If it needs to be bigger than a NUMA node, then you should most likely configure vNUMA to match the underlying hardware NUMA topology as closely as makes sense. You will see a performance improvement with vNUMA if your application is NUMA aware (this is the "it depends" part).
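The rule of thumb in the summary can be written down as a small Python sketch. The helper `vnuma_advice` and its parameters are hypothetical names chosen for this example, and it treats "fits in a NUMA node" as fitting both the node's cores and its RAM. It says nothing about whether the application is NUMA aware, which is still the part that decides how much vNUMA actually helps.

```python
import math


def vnuma_advice(vm_vcpus, vm_ram_gb, node_cores, node_ram_gb):
    """Hypothetical helper applying the rule of thumb from the summary.

    A VM "fits" when both its vCPUs and its RAM fit inside one NUMA node.
    """
    if vm_vcpus <= node_cores and vm_ram_gb <= node_ram_gb:
        return "fits in one NUMA node: vNUMA not needed"
    # Otherwise, estimate how many hardware NUMA nodes the VM has to span.
    nodes_needed = max(
        math.ceil(vm_vcpus / node_cores),
        math.ceil(vm_ram_gb / node_ram_gb),
    )
    return f"spans {nodes_needed} NUMA nodes: consider vNUMA matching the hardware"


# On a host whose NUMA nodes each have 8 cores and 64GB of RAM:
print(vnuma_advice(8, 48, node_cores=8, node_ram_gb=64))
print(vnuma_advice(32, 200, node_cores=8, node_ram_gb=64))
```

The first call describes a VM that fits inside a node; the second describes a 32 vCPU VM that has to span all four nodes of the example host.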

Todd


1 comment:

Just wanted to mention that on vSphere 5, vNUMA is not enabled by default for 8 vCPU virtual machines. It becomes "auto" enabled after you provision more than 8 vCPUs to a given virtual machine. I could be wrong, but I recall this being referenced as well.

Excerpt from Page 39 of the Perf Best practices here:

By default, vNUMA is enabled only for virtual machines with more than eight vCPUs. This feature can be enabled for smaller virtual machines, however, by adding to the .vmx file the line:

numa.vcpu.maxPerVirtualNode = X

(where X is the number of vCPUs per vNUMA node).

http://www.vmware.com/pdf/Perf_Best_Practices_vSphere5.0.pdf
